Last Updated: May 1, 2025

A deep dive into the GRU model for load forecasting

Design and artificial intelligence (AI) are increasingly intertwined, not only in creative industries but also in critical infrastructure sectors like energy management. As the world demands more sustainable and efficient energy solutions, machine learning models—like the Gated Recurrent Unit (GRU)—are stepping into pivotal roles.

The Evolving Role of AI in Load Forecasting

AI is no longer just a backend optimization tool—it’s becoming central to decision-making processes. In energy systems, AI models forecast power demand, helping to balance supply, reduce costs, and minimize outages. For instance:

- Time Series Forecasting: Deep learning models predict future loads based on historical consumption patterns.

- Grid Optimization: Real-time AI predictions allow dynamic adjustment of energy generation and distribution.

- Demand Response: Predictive analytics empower utilities to incentivize reduced usage during peak times.
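Forecasting from historical consumption usually starts by reframing the series as supervised examples: a window of past hours as input and a later hour as the target. Here is a minimal NumPy sketch of that step. The function name `make_windows` and the `lookback` and `horizon` parameters are illustrative assumptions, not taken from a specific library.

```python
import numpy as np

def make_windows(series, lookback, horizon=1):
    """Turn a 1-D series into (samples, lookback, 1) inputs and (samples,) targets.

    Hypothetical helper: each input holds `lookback` consecutive values and
    the target is the value `horizon` steps after the end of that window.
    """
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - lookback - horizon + 1
    if n_samples <= 0:
        raise ValueError("series is too short for this lookback and horizon")
    X = np.stack([series[i:i + lookback] for i in range(n_samples)])
    y = np.array([series[i + lookback + horizon - 1] for i in range(n_samples)])
    # Add a trailing feature axis, the layout recurrent layers typically expect.
    return X[..., np.newaxis], y

hourly_load = np.arange(10.0)  # stand-in for real hourly load values
X, y = make_windows(hourly_load, lookback=3)
```

With real data, a `lookback` of 24 or 168 hours is a natural starting point for daily or weekly patterns, though the best value depends on the dataset.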

Understanding the GRU: Mathematics and Mechanics

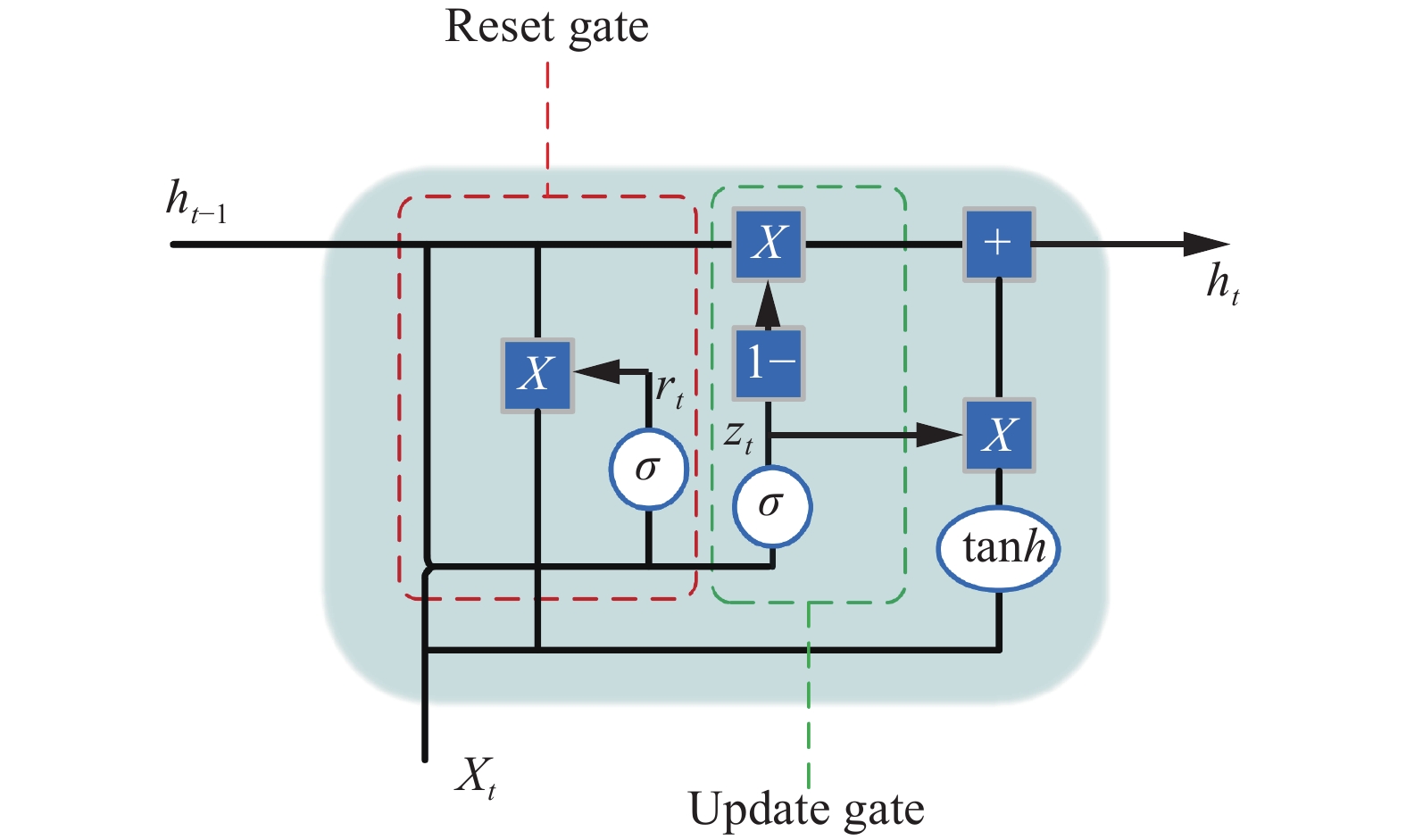

The GRU (Gated Recurrent Unit) is a type of Recurrent Neural Network (RNN) designed to handle sequential data while mitigating the vanishing gradient problem common in standard RNNs. It simplifies the architecture of the earlier LSTM by using two gates instead of three and by merging the cell state into the hidden state, and it often achieves comparable performance.

Mathematical Formulation

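At each time step $t$, the GRU cell updates its hidden state $h_t$ from the input $x_t$ and the previous state $h_{t-1}$ using two gates:

- Update Gate ($z_t$)

- Reset Gate ($r_t$)

The equations are:

$$
\begin{aligned}
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
\tilde{h}_t &= \tanh(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h) \\
h_t &= z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t
\end{aligned}
$$

where:

- $\sigma$ is the sigmoid activation function,

- $\tanh$ is the hyperbolic tangent activation,

- $\odot$ denotes element-wise multiplication,

- $W$ and $U$ matrices and $b$ vectors are learnable parameters.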
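To make the gate mechanics concrete, here is a minimal NumPy sketch of one GRU step. It assumes the common formulation in which the update gate `z` weights the previous state and `1 - z` weights the candidate state (some references swap the two). The parameter names (`W_z`, `U_z`, `b_z`, and so on) and the helper `init_params` are illustrative assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x_t, h_prev, p):
    """One GRU update for a single example (no batching)."""
    # Update gate: how much of the previous state to keep.
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])
    # Reset gate: how much of the previous state feeds the candidate.
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])
    # Candidate state built from the input and the reset-scaled history.
    h_cand = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r * h_prev) + p["b_h"])
    # Blend old state and candidate element-wise.
    return z * h_prev + (1.0 - z) * h_cand

def init_params(input_dim, hidden_dim, seed=0):
    """Hypothetical initializer: small random weights, zero biases."""
    rng = np.random.default_rng(seed)
    p = {}
    for g in ("z", "r", "h"):
        p[f"W_{g}"] = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
        p[f"U_{g}"] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        p[f"b_{g}"] = np.zeros(hidden_dim)
    return p

params = init_params(input_dim=1, hidden_dim=4)
h = np.zeros(4)
for load in [0.2, 0.5, 0.9]:  # three scaled load readings
    h = gru_step(np.array([load]), h, params)
```

Because each new state is an element-wise blend of the old state and a `tanh` output, every entry of `h` stays strictly between -1 and 1 when the initial state is zero.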

Key Intuition:

- The update gate decides how much of the past information to carry forward.

- The reset gate decides how much past information to forget.

- The candidate hidden state brings in the "fresh" memory.

This mechanism allows GRUs to capture long-term dependencies with fewer parameters and less computation per step than LSTMs.
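A rough parameter count illustrates the difference in size. The sketch below assumes the textbook layout, where each gate or candidate block has one input matrix, one recurrent matrix, and one bias vector. A GRU has three such blocks and an LSTM has four. The function names are illustrative.

```python
def gated_rnn_param_count(input_dim, hidden_dim, n_blocks):
    """Parameters in a gated recurrent layer with `n_blocks` weight blocks.

    Assumes one input matrix, one recurrent matrix and one bias per block.
    """
    per_block = hidden_dim * input_dim + hidden_dim * hidden_dim + hidden_dim
    return n_blocks * per_block

def gru_param_count(input_dim, hidden_dim):
    # Update gate, reset gate, candidate state.
    return gated_rnn_param_count(input_dim, hidden_dim, n_blocks=3)

def lstm_param_count(input_dim, hidden_dim):
    # Input gate, forget gate, output gate, cell candidate.
    return gated_rnn_param_count(input_dim, hidden_dim, n_blocks=4)

gru_n = gru_param_count(input_dim=1, hidden_dim=64)
lstm_n = lstm_param_count(input_dim=1, hidden_dim=64)
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer of the same width. Framework counts can differ slightly: Keras's GRU, for example, keeps a second bias vector per block when `reset_after=True`.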

Challenges

- Data Quality: Incomplete or noisy historical load data can mislead the model.

- Hyperparameter Tuning: Choosing the right number of layers, units, learning rates, and batch sizes requires experimentation.

- Overfitting: Small datasets can cause GRUs to memorize rather than generalize.
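For the data quality issue, one common first step is to put the series on a regular hourly grid and fill only short gaps. The sketch below uses pandas and is one possible approach, not necessarily what this project did. The function name `regularize_hourly` and the `max_gap_hours` threshold are illustrative assumptions, and the input is assumed to have a unique `DatetimeIndex`.

```python
import pandas as pd

def regularize_hourly(load, max_gap_hours=3):
    """Reindex a load series to a full hourly grid and interpolate short gaps.

    At most `max_gap_hours` consecutive missing values are filled; in longer
    gaps the remaining values stay NaN so they can be handled explicitly.
    """
    full_index = pd.date_range(load.index.min(), load.index.max(),
                               freq=pd.Timedelta(hours=1))
    regular = load.reindex(full_index)
    return regular.interpolate(method="time", limit=max_gap_hours,
                               limit_area="inside")

# Toy series with the 02:00 reading missing.
idx = pd.to_datetime([
    "2020-01-01 00:00", "2020-01-01 01:00",
    "2020-01-01 03:00", "2020-01-01 04:00",
])
load = pd.Series([100.0, 110.0, 130.0, 140.0], index=idx)
clean = regularize_hourly(load)
```

Leaving long gaps unfilled is a deliberate choice here: interpolating across many hours can invent a smooth curve that the real load never followed.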

Opportunities

- Accurate Forecasts: GRUs can model complex temporal patterns, leading to better load predictions.

- Real-Time Prediction: Lightweight GRU architectures allow fast inference, suitable for real-world deployment.

- Scalability: The same GRU model can be retrained for different regions or types of loads.

Design Engineering: The Glue Between Creativity and Execution

Building a high-performing GRU model isn't just about neural networks—it's about crafting a complete design-engineered solution that can be trusted, understood, and maintained.

Key Principles in GRU Model Design

- Data Preprocessing: Scaling inputs using MinMaxScaler and handling missing values robustly.

- Model Architecture: Choosing an optimal number of GRU layers and units to balance underfitting and overfitting.

- Loss Function: Using Mean Squared Error (MSE) or Mean Absolute Error (MAE) for regression accuracy.

- Optimization: Employing Adam or RMSProp optimizers for faster convergence.
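The principles above can be sketched as a small Keras model. This is a minimal illustration under stated assumptions, not the exact architecture used in this project: the function name `build_gru_forecaster`, the layer sizes `(64, 32)`, the 24-hour lookback, and the learning rate are all placeholder choices.

```python
import tensorflow as tf

def build_gru_forecaster(lookback, n_features, units=(64, 32), learning_rate=1e-3):
    """Stacked GRU regressor that predicts one value from a window of inputs."""
    model = tf.keras.Sequential()
    model.add(tf.keras.Input(shape=(lookback, n_features)))
    for i, n_units in enumerate(units):
        is_last = i == len(units) - 1
        # Every GRU layer except the last returns the full sequence so the
        # next GRU layer still receives one vector per time step.
        model.add(tf.keras.layers.GRU(n_units, return_sequences=not is_last))
    model.add(tf.keras.layers.Dense(1))  # single-step load forecast
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="mse",        # Mean Squared Error as the training loss
        metrics=["mae"],   # Mean Absolute Error tracked alongside it
    )
    return model

model = build_gru_forecaster(lookback=24, n_features=1)
```

Swapping `"mse"` for `"mae"` in `loss` makes training less sensitive to occasional large errors, and a callback such as `tf.keras.callbacks.EarlyStopping` is one way to limit overfitting on smaller datasets.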

"All models are wrong, but some are useful." — George Box

Tools Used

In building and deploying the GRU for load forecasting, several tools were essential:

- Python with TensorFlow/Keras: Core libraries for building the GRU model.

- Pandas and NumPy: Handling time series data and feature engineering.

- Matplotlib and Seaborn: Visualizing seasonal variation, training curves, and predicted-vs-actual plots.

- Scikit-Learn: For min-max scaling.
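For the min-max scaling step, one detail worth showing is fitting the scaler on the training portion only, so that no information from the test period leaks into preprocessing. This is a minimal sketch with made-up load values and an arbitrary split point.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Toy load values in chronological order (illustrative, not real data).
load = np.array([900.0, 1000.0, 1100.0, 1200.0, 1300.0, 1400.0]).reshape(-1, 1)
split = 4  # first four points for training, the rest for testing
train, test = load[:split], load[split:]

scaler = MinMaxScaler(feature_range=(0, 1))
train_scaled = scaler.fit_transform(train)  # learn min and max from training data only
test_scaled = scaler.transform(test)        # reuse the same min and max

# Map scaled values back to the original units, as done for predictions.
restored = scaler.inverse_transform(test_scaled)
```

Test values can fall outside the 0 to 1 range when the load exceeds anything seen in training, as it does in this toy example. That is expected with this approach and is usually preferable to refitting the scaler on the full dataset.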

Why GRU

The reasons for choosing a GRU, compared with an LSTM:

- Simpler architecture, which means faster training

- Better generalization on medium-sized datasets

- Fewer computational resources

- Competitive empirical results on time series tasks

- Simpler tuning

Case Study: My GRU-Based Load Forecasting Project

For my project:

- I used hourly load data from the Kaggle dataset named Panama Case Study.

- Performed feature engineering, reducing the data to the useful features.

- Built a multi-layer GRU model.

- Achieved an RMSE of 63.79, an MAE of 6.95, and an R² score of 0.84.

- The model's predictions closely tracked the actual load curves, especially capturing daily and weekly seasonality patterns.
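For reference, the three metrics reported above can be computed as follows. This is a plain NumPy sketch with toy numbers, not the project's evaluation code. The function name `regression_metrics` is illustrative, and it assumes the true values are not all identical (otherwise R² is undefined).

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE and R² for two equal-length sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    rmse = float(np.sqrt(np.mean(err ** 2)))
    mae = float(np.mean(np.abs(err)))
    ss_res = float(np.sum(err ** 2))                        # unexplained variation
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))   # total variation
    r2 = 1.0 - ss_res / ss_tot
    return {"rmse": rmse, "mae": mae, "r2": r2}

# Toy actual vs. predicted loads.
scores = regression_metrics([100, 200, 300, 400], [110, 190, 310, 390])
```

RMSE and MAE are in the units of the values passed in, so they should be computed after inverting any scaling if they are to be read as load units. On the same data MAE is never larger than RMSE, and a wide gap between the two usually means a few large errors dominate the squared term.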

Conclusion

The intersection of AI, energy systems, and engineering design is opening exciting frontiers. By combining GRUs' power with thoughtful system design, we can build tools that not only predict the future but help shape it sustainably and intelligently. In the coming years, the winners will be those who blend technical mastery with practical application.

The future of load forecasting—and AI generally—is collaborative: human ingenuity amplified by machine intelligence.

Food for thought

- Could hybrid models—combining GRUs with traditional time series methods (like ARIMA)—offer even better forecasting accuracy?

- Could a hybrid model combining GRUs with attention layers deliver a better balance between computational efficiency and forecasting accuracy? (Just working on this 😅)